S3 part2

Class 36: AWS S3 Part 2 (May 31st)

- Versioning in S3

- Storage classes in S3

- Lifecycle management in S3

- S3 Transfer Acceleration (S3TA)

- Working with S3 using the command line interface (CLI) (assignment)

Versioning:

- Versioning in Amazon S3 is a means of keeping multiple variants of an object in the same bucket.

- You can use the S3 Versioning feature to preserve, retrieve, and restore every version of every object stored in your buckets.

- With versioning, you can recover more easily from both unintended user actions and application failures.

- After versioning is enabled for a bucket, if Amazon S3 receives multiple write requests for the same object simultaneously, it stores all of those objects as separate versions.
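As a minimal sketch, assuming the AWS SDK for Python (boto3) and configured credentials, versioning can also be enabled from code. The helper names `build_versioning_request` and `enable_versioning` are hypothetical; `put_bucket_versioning` with `VersioningConfiguration={"Status": "Enabled"}` is the boto3 S3 client call. The client is passed in as a parameter so the request shape can be checked without calling AWS.

```python
from typing import Any


def build_versioning_request(bucket: str, enabled: bool = True) -> dict[str, Any]:
    """Build keyword arguments for boto3's S3 put_bucket_versioning call.

    Once enabled, a bucket can only be suspended, never made unversioned
    again, so the only two statuses produced here are Enabled and Suspended.
    """
    status = "Enabled" if enabled else "Suspended"
    return {"Bucket": bucket, "VersioningConfiguration": {"Status": status}}


def enable_versioning(s3_client: Any, bucket: str) -> dict[str, Any]:
    # s3_client is expected to be boto3.client("s3"); any object exposing
    # put_bucket_versioning(**kwargs) works, which keeps this testable offline.
    request = build_versioning_request(bucket)
    s3_client.put_bucket_versioning(**request)
    return request
```

For example, `enable_versioning(boto3.client("s3"), "ccits3bucketexample")` would turn versioning on for the bucket used in the practical.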

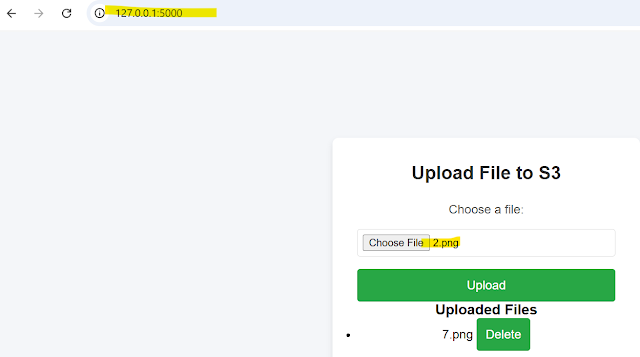

Practical

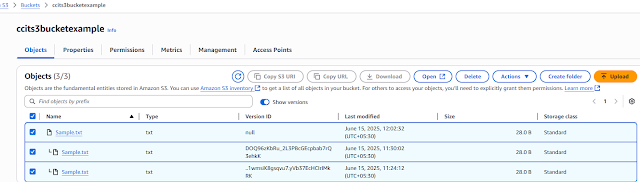

Step 1: Create a sample bucket named "ccits3bucketexample", enable Bucket Versioning, and click Create bucket.

Upload a sample.txt file with the following content:

Subbu S3 Bucket Versioning 1

Change the content as shown below and upload the file a second time. With "Show versions" turned on, the console lists two versions of the file, as the screenshot below shows:

Subbu S3 Bucket Versioning 2

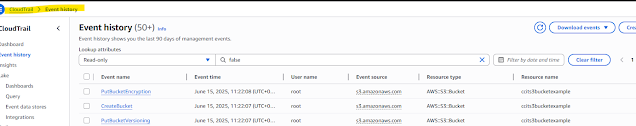

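Delete the file. After the deletion, a delete marker is added as the latest version of the file, and the earlier versions are kept. Bucket-level (management) operations you perform, such as creating the bucket and enabling versioning, are recorded in the AWS CloudTrail event history, which keeps 90 days of events. Object-level operations such as uploads and deletes are data events and are recorded only if a trail is configured to log S3 data events.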
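The behaviour seen in the practical (two uploads giving two versions, then a delete adding a delete marker) can be modelled with a small in-memory toy. This illustrates the versioning rules only and is not the S3 API: the class `ToyVersionedBucket` is hypothetical, and real S3 version IDs are opaque strings rather than the counter used here.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Version:
    version_id: str
    body: Optional[str]  # None for a delete marker
    is_delete_marker: bool = False


@dataclass
class ToyVersionedBucket:
    """Toy model of a versioning-enabled bucket (hypothetical, not the S3 API)."""
    versions: dict[str, list[Version]] = field(default_factory=dict)
    _counter: int = 0

    def _next_id(self) -> str:
        # Real S3 version IDs are opaque strings; a counter keeps this readable.
        self._counter += 1
        return f"v{self._counter}"

    def put(self, key: str, body: str) -> str:
        # Every upload adds a new version on top; older versions are kept.
        v = Version(self._next_id(), body)
        self.versions.setdefault(key, []).append(v)
        return v.version_id

    def delete(self, key: str) -> str:
        # A delete without a version ID only stacks a delete marker on top.
        marker = Version(self._next_id(), None, is_delete_marker=True)
        self.versions.setdefault(key, []).append(marker)
        return marker.version_id

    def delete_version(self, key: str, version_id: str) -> None:
        # Deleting a specific version ID removes it permanently; removing the
        # delete marker this way makes the previous version current again.
        self.versions[key] = [
            v for v in self.versions.get(key, []) if v.version_id != version_id
        ]

    def get(self, key: str, version_id: Optional[str] = None) -> Optional[str]:
        history = self.versions.get(key, [])
        if version_id is None:
            # The latest version wins; a delete marker makes the key look deleted.
            if not history or history[-1].is_delete_marker:
                return None
            return history[-1].body
        for v in history:
            if v.version_id == version_id and not v.is_delete_marker:
                return v.body
        return None
```

Uploading both sample.txt contents and then calling `delete("sample.txt")` makes a plain `get` return `None`, while `get("sample.txt", first_id)` still returns the first content. Removing the delete marker with `delete_version` makes the second upload current again, which is how a deleted object is restored in a versioned bucket.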

Storage Classes:

S3 Standard

- Used for frequently accessed data

- Stores files in multiple AZs (Availability Zones)

- 99.999999999% (11 nines) durability

- Default storage class

- Only storage (and request) charges apply; no retrieval charge

- No minimum storage duration

- No minimum billable object size

- Fast access

S3 Standard-Infrequent Access (S3 Standard-IA)

- Used for infrequently accessed data

- Stores files in multiple AZs

- 99.999999999% durability

- Fast access, but cheaper storage than S3 Standard

- Storage and retrieval charges apply

- Storage is charged for a minimum duration of 30 days

- Minimum billable object size of 128 KB

- Best suited for long-lived data (kept 30 days or more)

S3 One Zone-Infrequent Access (S3 One Zone-IA)

- Suitable for infrequently accessed data

- Stores files in a single AZ

- 99.999999999% durability within that AZ; the data is lost if the AZ is destroyed

- Cheaper than S3 Standard-IA

- Storage and retrieval charges apply

- Storage is charged for a minimum duration of 30 days

- Minimum billable object size of 128 KB

- Best suited for secondary backup copies

S3 Glacier Instant Retrieval

- Used for rarely accessed data (for example, about once a quarter) that still needs immediate access

- Stores files in multiple AZs, with immediate (millisecond) retrieval

- 99.999999999% durability

- Cheaper storage than S3 Standard and S3 Standard-IA

- Storage and retrieval charges apply

- Storage is charged for a minimum duration of 90 days

- Minimum billable object size of 128 KB

- Best suited for long-lived data (kept 90 days or more)

S3 Glacier Flexible Retrieval (formerly S3 Glacier)

- Used for rarely accessed archive data

- Stores files in multiple AZs; retrieval takes minutes to hours

- 99.999999999% durability

- Cheaper than S3 Glacier Instant Retrieval

- Storage and retrieval charges apply

- Storage is charged for a minimum duration of 90 days

- Minimum billable object size of 40 KB

- Best suited for long-lived data (kept 90 days or more)

S3 Glacier Deep Archive

- Used for rarely accessed, long-lived archive data

- Stores files in multiple AZs; retrieval takes hours

- 99.999999999% durability

- Cheaper than all of the classes above

- Storage and retrieval charges apply

- Storage is charged for a minimum duration of 180 days

- Minimum billable object size of 40 KB

- Best suited for long-lived data (kept 180 days or more)

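S3 Intelligent-Tiering

- Used for data with unknown or changing access patterns

- Stores files in multiple AZs

- 99.999999999% durability

- Automatically moves objects between access tiers based on usage (objects not accessed for 30 consecutive days move to a lower-cost tier)

- Storage charges plus a small monthly monitoring and automation charge per object apply; there are no retrieval charges

- Can cost less than S3 Standard when access patterns change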

S3 Express One Zone

- Stores files in a single AZ that you choose

- 99.999999999% durability

- High-performance storage for your most frequently accessed data

- Up to 10x faster than S3 Standard, with request costs 50% lower

- You can use S3 Express One Zone with services such as Amazon SageMaker model training, Amazon Athena, Amazon EMR, and AWS Glue Data Catalog to accelerate machine learning and analytics workloads.
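The minimum-duration and minimum-size rules above combine into simple arithmetic. The sketch below is a hypothetical helper, not an AWS pricing API. It uses the values from these notes with the API storage-class names (`GLACIER` is Glacier Flexible Retrieval) and returns the size multiplied by the days that would be billed. Intelligent-Tiering and Express One Zone are left out, and the 40 KB Glacier size rule is not modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClassMinimums:
    min_days: int     # minimum storage duration that is billed
    min_size_kb: int  # minimum billable object size (0 = no minimum here)


# Values follow the notes; the 40 KB Glacier size rule is deliberately omitted.
MINIMUMS = {
    "STANDARD": ClassMinimums(min_days=0, min_size_kb=0),
    "STANDARD_IA": ClassMinimums(min_days=30, min_size_kb=128),
    "ONEZONE_IA": ClassMinimums(min_days=30, min_size_kb=128),
    "GLACIER_IR": ClassMinimums(min_days=90, min_size_kb=128),
    "GLACIER": ClassMinimums(min_days=90, min_size_kb=0),
    "DEEP_ARCHIVE": ClassMinimums(min_days=180, min_size_kb=0),
}


def billable_kb_days(storage_class: str, size_kb: float, days_stored: int) -> float:
    """Return billed size x billed days after applying both minimums.

    An object deleted early is still charged up to the minimum duration,
    and a small object is charged as if it were min_size_kb.
    """
    m = MINIMUMS[storage_class]
    billed_size = max(size_kb, m.min_size_kb)
    billed_days = max(days_stored, m.min_days)
    return billed_size * billed_days
```

For instance, a 10 KB object kept for 7 days in `STANDARD_IA` is billed as 128 KB for 30 days (3840 KB-days), while the same object in `STANDARD` comes to 70 KB-days.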

Lifecycle Management:

- An Amazon S3 lifecycle rule configures predefined actions to perform on objects during their lifetime.

- You can create a lifecycle rule to optimize your objects' storage costs throughout their lifetime.

- You can scope a lifecycle rule to all objects in your bucket, or to objects with a shared prefix, certain object tags, or a certain object size.


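Lifecycle rule actions: transition objects to another storage class, or delete (expire) the objects. The storage classes objects can be transitioned to are:

- S3 Standard-Infrequent Access (S3 Standard-IA)

- S3 Glacier Flexible Retrieval (formerly S3 Glacier)

- S3 Glacier Deep Archive

- S3 Intelligent-Tiering

- S3 One Zone-Infrequent Access (S3 One Zone-IA)

- S3 Glacier Instant Retrieval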
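A lifecycle rule like the ones configured in the console can be sketched as the request body for boto3's `put_bucket_lifecycle_configuration`. The helper `build_lifecycle_rule`, the rule ID, and the `logs/` prefix are hypothetical. The 30-day check reflects the S3 restriction that objects must be stored for at least 30 days before they can transition to Standard-IA or One Zone-IA.

```python
from typing import Any, Optional

# S3 rejects transitions to these classes for objects younger than 30 days.
IA_CLASSES = {"STANDARD_IA", "ONEZONE_IA"}


def build_lifecycle_rule(
    rule_id: str,
    prefix: str,
    transitions: list[tuple[int, str]],
    expire_after_days: Optional[int] = None,
) -> dict[str, Any]:
    """Build one rule in the shape put_bucket_lifecycle_configuration expects."""
    for days, storage_class in transitions:
        if storage_class in IA_CLASSES and days < 30:
            raise ValueError(
                f"{storage_class} transitions need at least 30 days, got {days}"
            )
    rule: dict[str, Any] = {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},  # scope: only keys under this prefix
        "Status": "Enabled",
        "Transitions": [{"Days": d, "StorageClass": c} for d, c in transitions],
    }
    if expire_after_days is not None:
        # Expiration is the "delete the objects" action.
        rule["Expiration"] = {"Days": expire_after_days}
    return rule
```

The rule would be applied with `s3.put_bucket_lifecycle_configuration(Bucket="ccits3bucketexample", LifecycleConfiguration={"Rules": [rule]})`. In a versioning-enabled bucket, `Expiration` adds a delete marker to the current version rather than erasing data; noncurrent versions need a separate `NoncurrentVersionExpiration` action.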

S3 Transfer Acceleration (S3TA)

Amazon S3 Transfer Acceleration (S3TA) is a bucket-level feature that enables fast, easy, and secure transfers of files over long distances between your client and an S3 bucket. It routes transfers through Amazon CloudFront's globally distributed edge locations.


Transfer Acceleration can speed up content transfers to and from Amazon S3 by as much as 50-500% for long-distance transfers of larger objects.
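Enabling Transfer Acceleration and pointing a client at the accelerated endpoint can be sketched as below, assuming boto3. The helper functions are hypothetical; `put_bucket_accelerate_configuration` with `AccelerateConfiguration={"Status": "Enabled"}` and the `<bucket>.s3-accelerate.amazonaws.com` endpoint are the real S3 pieces. The name check is simplified: Transfer Acceleration requires a DNS-compliant bucket name that contains no periods.

```python
import re
from typing import Any

# Simplified check: 3-63 chars of lowercase letters, digits and hyphens,
# starting and ending with a letter or digit. Periods are excluded because
# Transfer Acceleration does not support bucket names containing them.
_ACCEL_NAME = re.compile(r"[a-z0-9][a-z0-9-]{1,61}[a-z0-9]")


def accelerate_request(bucket: str) -> dict[str, Any]:
    """Keyword arguments for boto3's put_bucket_accelerate_configuration."""
    if not _ACCEL_NAME.fullmatch(bucket):
        raise ValueError(f"bucket name not usable with Transfer Acceleration: {bucket!r}")
    return {"Bucket": bucket, "AccelerateConfiguration": {"Status": "Enabled"}}


def accelerate_endpoint(bucket: str) -> str:
    # After acceleration is enabled, clients must use this endpoint to benefit.
    accelerate_request(bucket)  # reuse the name check
    return f"https://{bucket}.s3-accelerate.amazonaws.com"
```

In boto3, a client created with `config=botocore.config.Config(s3={"use_accelerate_endpoint": True})` sends its requests through that accelerated endpoint.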

Replication: SRR (Same-Region Replication) and CRR (Cross-Region Replication)

- Replication is a process that enables automatic, asynchronous copying of objects across Amazon S3 buckets.

- Buckets that are configured for object replication can be owned by the same AWS account or by different accounts.

- You can replicate objects to a single destination bucket or to multiple destination buckets; filter options are also available.

- The destination buckets can be in different AWS Regions (CRR) or within the same Region as the source bucket (SRR).

- You can replicate both new and existing objects to the destination (existing objects are replicated with S3 Batch Replication). Replication starts as soon as the rule is in place and runs asynchronously.

- An IAM role with the required permissions is attached to the replication rule so that S3 can access the buckets, whether they are in the same Region, a different Region, or a different account.
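A replication setup can be sketched as the `ReplicationConfiguration` body for boto3's `put_bucket_replication`. The helper name, role ARN, and bucket names are hypothetical. Replication requires versioning to be enabled on both the source and destination buckets, and the same configuration shape serves SRR and CRR: which one you get depends only on the destination bucket's Region.

```python
from typing import Any, Optional


def build_replication_config(
    role_arn: str,
    destinations: list[str],
    prefix: str = "",
    storage_class: Optional[str] = None,
) -> dict[str, Any]:
    """Build a ReplicationConfiguration with one rule per destination bucket.

    Uses the rule shape with Filter and Priority, which also requires
    DeleteMarkerReplication to be stated explicitly.
    """
    rules = []
    for priority, dest_bucket in enumerate(destinations, start=1):
        destination: dict[str, Any] = {"Bucket": f"arn:aws:s3:::{dest_bucket}"}
        if storage_class:
            # Optionally store the replicas in a cheaper class.
            destination["StorageClass"] = storage_class
        rules.append({
            "ID": f"replicate-to-{dest_bucket}",
            "Priority": priority,          # higher number wins if rules overlap
            "Filter": {"Prefix": prefix},  # "" replicates the whole bucket
            "Status": "Enabled",
            "DeleteMarkerReplication": {"Status": "Disabled"},
            "Destination": destination,
        })
    return {"Role": role_arn, "Rules": rules}
```

The result would be applied with `s3.put_bucket_replication(Bucket="source-bucket", ReplicationConfiguration=cfg)`, where `source-bucket` is a placeholder; a destination in another account additionally needs permissions granted on the destination side.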