Terraform Lifecycle

Class 17th Terraform Lifecycle May2nd(Devops)

Terraform lifecycle (create_before_destroy, prevent_destroy, ignore_changes)

Terraform modules (reusability / splitting the work across teams)

Dynamic block (reduces the script)

Terraform locals

Variable precedence

Collections (for loops, set, list, map)

Built-in functions


Terraform Lifecycle

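A .tf file is written as a set of blocks, such as resource blocks, output blocks, and variable blocks.

The lifecycle block inside a resource controls how Terraform treats changes to that resource, for example to protect it when someone makes an unnecessary change.

Step1: With create_before_destroy = true, Terraform creates the replacement instance first and only then destroys the old one.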

[ec2-user@ip-172-31-20-61 CCIT]$ cat cloudinfra.tf

provider "aws" {
  region = "eu-west-1"
}

#resource "aws_s3_bucket" "ccitbucket" {
#  bucket = "ccitbucketvsm2025"
#}

resource "aws_instance" "ccitinst" {
  ami           = var.inst_ami
  instance_type = "t2.micro"

  tags = {
    Name = "ccitinstance"
  }

  lifecycle {
    create_before_destroy = true
  }
}

variable "inst_ami" {}

[ec2-user@ip-172-31-20-61 CCIT]$ terraform apply -auto-approve -var="inst_ami=ami-09c39a45aca52b2d6"

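Step2: prevent_destroy = true stops the resource from being destroyed through Terraform. It does not stop someone from deleting it in the AWS console.

lifecycle {
  prevent_destroy = true
}

With this block in place, the destroy command below fails with an error instead of deleting the instance:

[ec2-user@ip-172-31-20-61 CCIT]$ terraform destroy -auto-approve -var="inst_ami=ami-09c39a45aca52b2d6"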

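Step3: ignore_changes tells Terraform to ignore changes to the listed attributes, here tags and ami, so modifying them does not trigger an update.

lifecycle {
  ignore_changes = [tags, ami]
}

ignore_changes = all -- ignores changes to every attribute (all is written as a bare keyword, not inside a list)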
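To see how the three lifecycle arguments from the steps above sit together in one resource, here is a minimal sketch. It reuses the instance and the var.inst_ami variable from cloudinfra.tf; combining all three in one block is an assumption made for illustration, not something the class steps did.

```hcl
# Sketch only: the three lifecycle arguments from Steps 1-3 in one block.
# var.inst_ami is the variable already declared in cloudinfra.tf.
resource "aws_instance" "ccitinst" {
  ami           = var.inst_ami
  instance_type = "t2.micro"

  tags = {
    Name = "ccitinstance"
  }

  lifecycle {
    # On replacement, build the new instance before removing the old one.
    create_before_destroy = true

    # Reject any plan that would destroy this instance.
    prevent_destroy = true

    # Do not react to changes in these two attributes.
    ignore_changes = [tags, ami]
  }
}
```

Note that prevent_destroy also blocks a replacement, because a replacement includes a destroy. In practice you would usually pick the arguments that match what you want to protect rather than enabling all of them.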

Step4: Install the tree utility with sudo yum install tree. It lists the files and folders under the current directory.

[ec2-user@ip-172-31-17-136 ccit]$ tree

.

├── cloudinfra.tf

├── terraform.tfstate

└── terraform.tfstate.backup


Terraform module

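Modules give code reusability. Instead of dumping every resource into the main .tf file, split the resources into separate modules. A module is just a folder of .tf files, and the main .tf file calls each module. This makes the configuration easier to maintain and lets each team own its part. Each module can also declare the provider plugins it needs.

There are two kinds of modules: the root module (the folder where you run terraform) and child modules (the modules called from the root module).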

Step1:

[ec2-user@ip-172-31-17-136 ccit]$ cat cloudinfra.tf

provider "aws" {
  region = "eu-west-1"
}

resource "aws_vpc" "ccitvpc" {
  cidr_block = "10.0.0.0/16"

  tags = {
    Name = "CCITVPC"
  }
}

Step2: When every resource lives in a single .tf file, the file grows rapidly. In real projects the resources are split into separate .tf files.

[ec2-user@ip-172-31-17-136 ccit]$ cat cloudinfra.tf

provider "aws" {
  region = "eu-west-1"
}

resource "aws_vpc" "ccitvpc" {
  cidr_block = "10.0.0.0/16"

  tags = {
    Name = "CCITVPC"
  }
}

resource "aws_s3_bucket" "ccitbucket" {
  bucket = "ccitbucket-231"
}

resource "aws_instance" "ccitinst" {
  ami           = "ami-04e7764922e1e3a57"
  instance_type = "t2.micro"

  tags = {
    Name = "CCIT-INST"
  }
}


Module

[ec2-user@ip-172-31-17-136 ccit]$ mkdir -p ./modules/vpc_module

[ec2-user@ip-172-31-17-136 ccit]$ mkdir -p ./modules/ec2_module

[ec2-user@ip-172-31-17-136 ccit]$ mkdir -p ./modules/s3_module

[ec2-user@ip-172-31-17-136 ec2_module]$ pwd

/home/ec2-user/ccit/modules/ec2_module

[ec2-user@ip-172-31-17-136 ec2_module]$ cat ec2infra.tf

resource "aws_instance" "ccitinst" {
  ami           = "ami-04e7764922e1e3a57"
  instance_type = "t2.micro"

  tags = {
    Name = "CCIT-INST"
  }
}

[ec2-user@ip-172-31-17-136 vpc_module]$ pwd

/home/ec2-user/ccit/modules/vpc_module

[ec2-user@ip-172-31-17-136 vpc_module]$ cat vpc_infra.tf

resource "aws_vpc" "ccitvpc" {
  cidr_block = "10.0.0.0/16"

  tags = {
    Name = "CCITVPC"
  }
}

[ec2-user@ip-172-31-17-136 s3_module]$ cat s3_infra.tf

resource "aws_s3_bucket" "ccitbucket" {

bucket = "ccitbucket-231"

}


[ec2-user@ip-172-31-17-136 ccit]$ tree

.

├── cloudinfra.tf

├── modules

│ ├── ec2_module

│ │ └── ec2infra.tf

│ ├── s3_module

│ │ └── s3_infra.tf

│ └── vpc_module

│ └── vpc_infra.tf

├── terraform.tfstate

└── terraform.tfstate.backup

4 directories, 6 files

[ec2-user@ip-172-31-17-136 ccit]$ cd ..

[ec2-user@ip-172-31-17-136 ~]$ cd ccit

[ec2-user@ip-172-31-17-136 ccit]$ cd modules

[ec2-user@ip-172-31-17-136 modules]$ tree

.

├── ec2_module

│ └── ec2infra.tf

├── s3_module

│ └── s3_infra.tf

└── vpc_module

└── vpc_infra.tf

3 directories, 3 files

[ec2-user@ip-172-31-17-136 modules]$ cat /ccit/cloudinfra.tf

cat: /ccit/cloudinfra.tf: No such file or directory


[ec2-user@ip-172-31-17-136 modules]$ cat /home/ec2-user/ccit/cloudinfra.tf

provider "aws" {
  region = "eu-west-1"
}

# The source paths must match the folder created earlier: ./modules/...
module "vpc" {
  source = "./modules/vpc_module/"
}

module "s3" {
  source = "./modules/s3_module/"
}

module "ec2" {
  source = "./modules/ec2_module/"
}

[ec2-user@ip-172-31-17-136 ccit]$ terraform apply -auto-approve

│ Error: Module not installed

│

│ on cloudinfra.tf line 15:

│ 15: module "ec2" {

│

│ This module is not yet installed. Run "terraform init" to install all modules required by this configuration.

Step 3:

[ec2-user@ip-172-31-17-136 ccit]$ terraform init

Initializing the backend...

Initializing modules...

Initializing provider plugins...

Step4: The VPC, EC2 instance, and S3 bucket are created successfully.

[ec2-user@ip-172-31-17-136 ccit]$ terraform apply -auto-approve

module.ec2.aws_instance.ccitinst: Creating...

module.vpc.aws_vpc.ccitvpc: Creating...

module.s3.aws_s3_bucket.ccitbucket: Creating...

module.s3.aws_s3_bucket.ccitbucket: Creation complete after 1s [id=ccitbucket-231]

module.vpc.aws_vpc.ccitvpc: Creation complete after 2s [id=vpc-05605cbdad34a41c9]

module.ec2.aws_instance.ccitinst: Still creating... [10s elapsed]

module.ec2.aws_instance.ccitinst: Creation complete after 12s [id=i-093279d54f60bf4d5]

Apply complete! Resources: 3 added, 0 changed, 0 destroyed.

Step5:

[ec2-user@ip-172-31-17-136 ccit]$ terraform destroy -auto-approve

Destroy complete! Resources: 3 destroyed.
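The modules above hard-code their values, so each one can only build one fixed resource. As a rough sketch of how a child module becomes reusable, the VPC module could take its values as inputs and return the VPC id as an output. The variable names vpc_cidr and vpc_name, the output name vpc_id, and the values passed from the root module are all hypothetical and were not part of the class files.

```hcl
# --- modules/vpc_module/vpc_infra.tf (child module) ---
# Hypothetical inputs; the defaults match the values used in the class.
variable "vpc_cidr" {
  type    = string
  default = "10.0.0.0/16"
}

variable "vpc_name" {
  type    = string
  default = "CCITVPC"
}

resource "aws_vpc" "ccitvpc" {
  cidr_block = var.vpc_cidr

  tags = {
    Name = var.vpc_name
  }
}

# Expose the VPC id so the root module can use it.
output "vpc_id" {
  value = aws_vpc.ccitvpc.id
}

# --- cloudinfra.tf (root module) ---
module "vpc" {
  source   = "./modules/vpc_module"
  vpc_cidr = "10.1.0.0/16" # hypothetical value
  vpc_name = "CCITVPC-DEV" # hypothetical value
}

output "vpc_id" {
  value = module.vpc.vpc_id
}
```

The two parts belong in separate files, as the comments indicate. With inputs like these, the same module folder can be called several times with different values instead of being copied.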

Step6: So far the module source was a local path, source = "./modules/vpc_module/". Instead, we can store the module file in an S3 bucket and reference it by its public URL.

Create a sample bucket, add the VPC script file, and upload it (see the S3 class notes):

https://oracleask.blogspot.com/2025/04/class-8th-s3-storage-service-simple.html

Public URL: https://ccitsamplebucket123.s3.eu-west-1.amazonaws.com/vpc_infra.tf

After changing the source in cloudinfra.tf to the URL, terraform apply fails until you run terraform init again:

[ec2-user@ip-172-31-17-136 ccit]$ terraform apply -auto-approve

╷

│ Error: Module source has changed

│

│ on cloudinfra.tf line 8, in module "vpc":

│ 8: source ="https://ccitsamplebucket123.s3.eu-west-1.amazonaws.com/vpc_infra.tf"

│

│ The source address was changed since this module was installed. Run "terraform init" to install all modules required by this

│ configuration.

[ec2-user@ip-172-31-17-136 ccit]$ terraform init

Initializing the backend...

Initializing modules...

Downloading https://ccitsamplebucket123.s3.eu-west-1.amazonaws.com/vpc_infra.tf for vpc...

╷

│ Error: Failed to download module

│

│ on cloudinfra.tf line 6:

│ 6: module "vpc" {

│

│ Could not download module "vpc" (cloudinfra.tf:6) source code from

│ "https://ccitsamplebucket123.s3.eu-west-1.amazonaws.com/vpc_infra.tf": error downloading

│ 'https://ccitsamplebucket123.s3.eu-west-1.amazonaws.com/vpc_infra.tf': no source URL was returned

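Step8: The download fails because the URL points to a single .tf file rather than a module package. Put the file into a zip archive and upload the archive to the bucket instead.

Step9: Change the source to the zip archive, using the s3:: prefix, and run terraform init again:

source = "s3::https://ccitsamplebucket123.s3.eu-west-1.amazonaws.com/vpc_infra.zip"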

[ec2-user@ip-172-31-17-136 ccit]$ terraform init

Initializing the backend...

Initializing modules...

Downloading s3::https://ccitsamplebucket123.s3.eu-west-1.amazonaws.com/vpc_infra.zip for vpc...

- vpc in .terraform/modules/vpc

Initializing provider plugins...

- Reusing previous version of hashicorp/aws from the dependency lock file

- Using previously-installed hashicorp/aws v5.97.0

Step10:

[ec2-user@ip-172-31-17-136 ccit]$ terraform apply -auto-approve

Apply complete! Resources: 3 added, 0 changed, 0 destroyed.

The bucket, the EC2 instance, and the VPC are created.

Module sources can be local paths, HTTP URLs, or S3 buckets.
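The source forms mentioned above can be compared side by side in a short sketch. The local path and the S3 URL are the ones used in the earlier steps. The HTTPS and Git addresses use example.com and are purely hypothetical placeholders. Git is one more source type that Terraform supports, shown here for completeness.

```hcl
# Alternatives for the same module; in a real configuration pick one.

# Local path (used in the first module steps).
module "vpc_local" {
  source = "./modules/vpc_module"
}

# Zip archive in an S3 bucket (the form used in Step9).
module "vpc_s3" {
  source = "s3::https://ccitsamplebucket123.s3.eu-west-1.amazonaws.com/vpc_infra.zip"
}

# Zip archive over plain HTTPS. Hypothetical URL.
module "vpc_http" {
  source = "https://example.com/modules/vpc_infra.zip"
}

# Git repository. Hypothetical URL.
module "vpc_git" {
  source = "git::https://example.com/ccit/vpc_module.git"
}
```

Whenever a source changes, terraform init has to be run again so the module is downloaded into .terraform/modules, as the error in Step6 showed.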

Dynamic block

A dynamic block is a powerful Terraform feature that generates nested blocks inside a resource from a collection. It reduces redundancy, much like using a for loop to print ten lines instead of writing the print statement ten times.

Step1:

[ec2-user@ip-172-31-17-136 ccit]$ cat cloudinfra.tf

provider "aws" {
  region = "eu-west-1"
}

# Create a VPC with CIDR block 10.0.0.0/25
resource "aws_vpc" "CCITVPC" {
  cidr_block           = "10.0.0.0/25"
  enable_dns_support   = true
  enable_dns_hostnames = true

  tags = {
    Name = "CCITVPC"
  }
}

resource "aws_security_group" "CCIT_SG" {
  vpc_id = aws_vpc.CCITVPC.id
  name   = "CCIT SG"

  # Ingress rules defined manually
  ingress {
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "CCIT SG"
  }
}

Step2:

[ec2-user@ip-172-31-17-136 ccit]$ terraform apply -auto-approve

Apply complete! Resources: 2 added, 0 changed, 0 destroyed.

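Opening one more port this way, for example port 80, means adding another ingress block by hand:

ingress {
  from_port   = 80
  to_port     = 80
  protocol    = "tcp"
  cidr_blocks = ["0.0.0.0/0"]
}

With a dynamic block, the rules come from a list in locals instead: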

[ec2-user@ip-172-31-17-136 ccit]$ cat cloudinfra.tf

provider "aws" {
  region = "eu-west-1"
}

# Collection of ingress rules; add one line per port
locals {
  ingress_rules = [
    { port = 443, protocol = "tcp" },
    { port = 80, protocol = "tcp" },
    { port = 22, protocol = "tcp" },
    { port = 389, protocol = "tcp" }
  ]
}

# Create a VPC with CIDR block 10.0.0.0/25
resource "aws_vpc" "CCITVPC" {
  cidr_block           = "10.0.0.0/25"
  enable_dns_support   = true
  enable_dns_hostnames = true

  tags = {
    Name = "CCITVPC"
  }
}

resource "aws_security_group" "CCIT_SG" {
  vpc_id = aws_vpc.CCITVPC.id
  name   = "CCIT SG"

  # Ingress rules generated from local.ingress_rules
  dynamic "ingress" {
    for_each = local.ingress_rules

    content {
      from_port   = ingress.value.port
      to_port     = ingress.value.port
      protocol    = ingress.value.protocol
      cidr_blocks = ["0.0.0.0/0"]
    }
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "CCIT SG"
  }
}

[ec2-user@ip-172-31-17-136 ccit]$ terraform apply -auto-approve

Apply complete! Resources: 0 added, 1 changed, 0 destroyed.

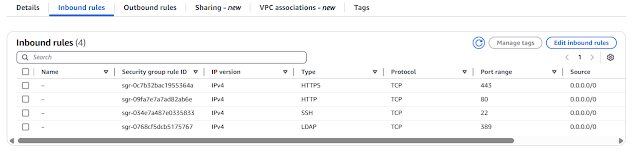

Step4: With the dynamic block, the script added the remaining ports 22 and 389. From now on, opening a new port only takes one more line in local.ingress_rules, for example:

{ port = 389, protocol = "tcp" }
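The same idea can be taken one step further by moving the rule list into an input variable and letting each rule carry its own CIDR range. This is only a sketch under assumptions: the variable name ingress_rules, the iterator name rule, and the example CIDR values are hypothetical, and the block assumes the aws_vpc.CCITVPC resource from the file above is still defined.

```hcl
# Hypothetical variable: one object per ingress rule.
variable "ingress_rules" {
  type = list(object({
    port        = number
    protocol    = string
    cidr_blocks = list(string)
  }))

  default = [
    { port = 443, protocol = "tcp", cidr_blocks = ["0.0.0.0/0"] },
    { port = 22, protocol = "tcp", cidr_blocks = ["10.0.0.0/25"] },
  ]
}

resource "aws_security_group" "CCIT_SG" {
  vpc_id = aws_vpc.CCITVPC.id
  name   = "CCIT SG"

  dynamic "ingress" {
    for_each = var.ingress_rules
    iterator = rule # optional: renames the loop variable from "ingress" to "rule"

    content {
      from_port   = rule.value.port
      to_port     = rule.value.port
      protocol    = rule.value.protocol
      cidr_blocks = rule.value.cidr_blocks
    }
  }
}
```

Using a variable instead of locals means the rule list can be overridden per environment without editing the resource itself.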

--Thanks
