Multipart Upload

Class 40: AWS Multipart Upload (June 9th)

(AWS recommends multipart upload for files larger than 100 MB: the file is split into chunks, the chunks are uploaded, and S3 finally joins them into a single object. This is the best approach for uploading large files to an S3 bucket.)

- Multipart upload allows users to break a single file into multiple parts and upload them independently.

- You can upload these object parts independently and in any order. If transmission of any part fails, you can retransmit that part without affecting the other parts.

- After all parts of your object are uploaded, Amazon S3 assembles them and creates the object.

- In general, when your object size reaches 100 MB, you should consider using multipart uploads instead of uploading the object in a single operation.

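- You can split a file into at most 10,000 parts. Each part must be at least 5 MB (5 MiB) and at most 5 GB, except the last part, which can be smaller. Parts of an incomplete upload remain stored in S3, and AWS charges for them until the upload is completed or aborted.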
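The limits above can be turned into a small planning step before any upload starts. The sketch below is plain Python with no AWS calls; the function name `plan_parts` is hypothetical. It computes part numbers, byte offsets and lengths for a file, and grows the part size if the requested size would need more than 10,000 parts. The example uses the 50 MB part size from this lab and the 298,403,108-byte video shown in the directory listing.

```python
import math

MIN_PART_SIZE = 5 * 1024 * 1024         # 5 MiB: minimum for every part except the last
MAX_PART_SIZE = 5 * 1024 * 1024 * 1024  # 5 GiB: maximum size of one part
MAX_PARTS = 10_000                      # maximum number of parts in one upload


def plan_parts(file_size: int, part_size: int = 50 * 1024 * 1024) -> list:
    """Return (part_number, offset, length) tuples for a multipart upload.

    If part_size would need more than MAX_PARTS parts, it is increased
    just enough for the file to fit into MAX_PARTS parts.
    """
    if file_size <= 0:
        raise ValueError("file_size must be positive")
    part_size = max(part_size, MIN_PART_SIZE, math.ceil(file_size / MAX_PARTS))
    if part_size > MAX_PART_SIZE:
        raise ValueError("file too large for a single multipart upload")
    plan = []
    offset, number = 0, 1
    while offset < file_size:
        length = min(part_size, file_size - offset)  # last part may be smaller
        plan.append((number, offset, length))
        offset += length
        number += 1
    return plan


# The 284 MB video from the lab, split into 50 MiB parts
for number, offset, length in plan_parts(298_403_108):
    print(number, offset, length)
```

The last tuple describes the 36,259,108-byte remainder, which matches the size of video_part_af in the listing.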

Practical

Step1: Create a bucket (this example uses "ccitmultipartupload2", the name used in the commands below).

First, choose a file (here a 284 MB video) and split it into 50 MB parts by running the following command in Git Bash:

$ split -b 50M video.mp4 video_part_
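Without Git Bash, the same splitting can be sketched in Python. This is an illustrative stand-in for `split -b`, not a full reimplementation: the hypothetical `split_file` writes the pieces next to the source file and only supports the 676 two-letter suffixes aa to zz.

```python
import itertools
import string
from pathlib import Path


def split_suffixes():
    """Yield 'aa', 'ab', ..., 'zz' (split's default two-letter suffixes)."""
    for first, second in itertools.product(string.ascii_lowercase, repeat=2):
        yield first + second


def split_file(path: str, chunk_size: int, prefix: str) -> list:
    """Split `path` into chunk_size-byte files named prefix + 'aa', 'ab', ...

    The pieces are written to the same folder as the source file.
    Returns the created paths in order.
    """
    src = Path(path)
    created = []
    with src.open("rb") as f:
        for suffix in split_suffixes():
            data = f.read(chunk_size)
            if not data:
                break
            piece = src.parent / f"{prefix}{suffix}"
            piece.write_bytes(data)
            created.append(piece)
        if f.read(1):
            # All 676 suffixes used but data remains
            raise ValueError("more than 676 pieces; use a larger chunk_size")
    return created


# Rough equivalent of: split -b 50M video.mp4 video_part_
# split_file("video.mp4", 50 * 1024 * 1024, "video_part_")
```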

Administrator@DESKTOP-AV2PARO MINGW64 ~/Desktop/mulitpart

$ ls -lrt

total 1175740

-rw-r--r-- 1 Administrator 197121 298403108 Dec 12 11:06 video.mp4

-rw-r--r-- 1 Administrator 197121 607142013 Dec 15 09:04 video_20241215_085947.mp4

-rw-r--r-- 1 Administrator 197121 52428800 Jun 11 00:48 video_part_aa

-rw-r--r-- 1 Administrator 197121 52428800 Jun 11 00:48 video_part_ab

-rw-r--r-- 1 Administrator 197121 52428800 Jun 11 00:48 video_part_ac

-rw-r--r-- 1 Administrator 197121 52428800 Jun 11 00:48 video_part_ad

-rw-r--r-- 1 Administrator 197121 52428800 Jun 11 00:48 video_part_ae

-rw-r--r-- 1 Administrator 197121 36259108 Jun 11 00:48 video_part_af

Step2: Upload the parts to the newly created bucket using AWS CLI commands. First, configure the CLI with your credentials:

PS C:\Users\Administrator> aws configure

AWS Access Key ID [****************HWK4]: <YOUR_ACCESS_KEY_ID>

AWS Secret Access Key [****************uOLH]: <YOUR_SECRET_ACCESS_KEY>

Default region name [eu-west-1]: eu-west-1

Default output format [None]: json

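The create-multipart-upload command starts the upload and returns an UploadId. Every upload-part call and the final complete-multipart-upload call must reference this UploadId; S3 uses it to join the parts into a single file.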

PS C:\Users\Administrator\Desktop\mulitpart>aws s3api create-multipart-upload --bucket ccitmultipartupload2 --key video.mp4 --region eu-west-1

{

"ServerSideEncryption": "AES256",

"Bucket": "ccitmultipartupload2",

"Key": "video.mp4",

"UploadId": "XOdv_ADNJnTuS38S6iXGxorl0TFVpDFCGoEJ2FoO9wx0cpkIhrzMZks4phjX.y_1HUCWDBZKJ9LNO6sVEUOBSud6Y047fk3CcKI3mAhbo2r_QbK_T1DYkTUtj7jbs5Jl"

}

At this point the bucket still appears empty. The object is not listed until all parts have been uploaded and the upload has been completed.
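Because the object stays invisible until completion, a forgotten upload is easy to miss while its parts keep incurring storage charges. In-progress uploads can be listed with `aws s3api list-multipart-uploads --bucket ...` and removed with `aws s3api abort-multipart-upload`; an S3 lifecycle rule with an AbortIncompleteMultipartUpload action can also clean them up automatically. A minimal boto3 sketch of the same cleanup follows; the function name is hypothetical and `s3_client` is assumed to be a `boto3.client("s3")`.

```python
def abort_incomplete_uploads(s3_client, bucket: str) -> list:
    """Abort every in-progress multipart upload in `bucket`.

    `s3_client` is assumed to be a boto3 S3 client.
    Returns the UploadIds that were aborted.
    """
    aborted = []
    paginator = s3_client.get_paginator("list_multipart_uploads")
    for page in paginator.paginate(Bucket=bucket):
        # Pages without in-progress uploads have no "Uploads" key
        for upload in page.get("Uploads", []):
            s3_client.abort_multipart_upload(
                Bucket=bucket, Key=upload["Key"], UploadId=upload["UploadId"]
            )
            aborted.append(upload["UploadId"])
    return aborted


# abort_incomplete_uploads(boto3.client("s3"), "ccitmultipartupload2")
```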

Step3: Upload each part with upload-part, passing the UploadId returned above.

Part1

PS C:\Users\Administrator\Desktop\mulitpart> aws s3api upload-part --bucket ccitmultipartupload2 --key video.mp4 --part-number 1 --body C:\Users\Administrator\Desktop\mulitpart\Video_part_aa --upload-id XOdv_ADNJnTuS38S6iXGxorl0TFVpDFCGoEJ2FoO9wx0cpkIhrzMZks4phjX.y_1HUCWDBZKJ9LNO6sVEUOBSud6Y047fk3CcKI3mAhbo2r_QbK_T1DYkTUtj7jbs5Jl

{

"ServerSideEncryption": "AES256",

"ETag": "\"f6279bc6c5aa4efa009c0d599cd1b206\"",

...

}

Part2

PS C:\Users\Administrator\Desktop\mulitpart> aws s3api upload-part --bucket ccitmultipartupload2 --key video.mp4 --part-number 2 --body C:\Users\Administrator\Desktop\mulitpart\Video_part_ab --upload-id XOdv_ADNJnTuS38S6iXGxorl0TFVpDFCGoEJ2FoO9wx0cpkIhrzMZks4phjX.y_1HUCWDBZKJ9LNO6sVEUOBSud6Y047fk3CcKI3mAhbo2r_QbK_T1DYkTUtj7jbs5Jl

{

"ServerSideEncryption": "AES256",

"ETag": "\"5224ca5d459b8044bb554cfffdc4c122\"",

"ChecksumCRC64NVME": "VVdRkDOJyYg="

}

Part3

PS C:\Users\Administrator\Desktop\mulitpart> aws s3api upload-part --bucket ccitmultipartupload2 --key video.mp4 --part-number 3 --body C:\Users\Administrator\Desktop\mulitpart\Video_part_ac --upload-id XOdv_ADNJnTuS38S6iXGxorl0TFVpDFCGoEJ2FoO9wx0cpkIhrzMZks4phjX.y_1HUCWDBZKJ9LNO6sVEUOBSud6Y047fk3CcKI3mAhbo2r_QbK_T1DYkTUtj7jbs5Jl

{

"ServerSideEncryption": "AES256",

"ETag": "\"e9942afaa877e2a530da4b06d8eae9fb\"",

"ChecksumCRC64NVME": "9touXfRy/cc="

}

Part4

PS C:\Users\Administrator\Desktop\mulitpart> aws s3api upload-part --bucket ccitmultipartupload2 --key video.mp4 --part-number 4 --body C:\Users\Administrator\Desktop\mulitpart\Video_part_ad --upload-id XOdv_ADNJnTuS38S6iXGxorl0TFVpDFCGoEJ2FoO9wx0cpkIhrzMZks4phjX.y_1HUCWDBZKJ9LNO6sVEUOBSud6Y047fk3CcKI3mAhbo2r_QbK_T1DYkTUtj7jbs5Jl

{

"ServerSideEncryption": "AES256",

"ETag": "\"fcdd80466dd85133c87772e41123f3fa\"",

"ChecksumCRC64NVME": "tctu0jHQJ1A="

}

Part5

PS C:\Users\Administrator\Desktop\mulitpart>aws s3api upload-part --bucket ccitmultipartupload2 --key video.mp4 --part-number 5 --body C:\Users\Administrator\Desktop\mulitpart\Video_part_ae --upload-id XOdv_ADNJnTuS38S6iXGxorl0TFVpDFCGoEJ2FoO9wx0cpkIhrzMZks4phjX.y_1HUCWDBZKJ9LNO6sVEUOBSud6Y047fk3CcKI3mAhbo2r_QbK_T1DYkTUtj7jbs5Jl

{

"ServerSideEncryption": "AES256",

"ETag": "\"9318088f5b6055f1f5af8f69bf982b49\"",

"ChecksumCRC64NVME": "yjZxdkyCmm8="

}

Part6

PS C:\Users\Administrator\Desktop\mulitpart> aws s3api upload-part --bucket ccitmultipartupload2 --key video.mp4 --part-number 6 --body C:\Users\Administrator\Desktop\mulitpart\Video_part_af --upload-id XOdv_ADNJnTuS38S6iXGxorl0TFVpDFCGoEJ2FoO9wx0cpkIhrzMZks4phjX.y_1HUCWDBZKJ9LNO6sVEUOBSud6Y047fk3CcKI3mAhbo2r_QbK_T1DYkTUtj7jbs5Jl

{

"ServerSideEncryption": "AES256",

"ETag": "\"d602321594b8219b5d0a857a4e5d34cd\"",

"ChecksumCRC64NVME": "WSsI3fzEWg8="

}
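Typing one upload-part command per file is error-prone. A hedged alternative is to loop over the split files in name order, which matches the part numbering above (video_part_aa is part 1, video_part_ab is part 2, and so on). `upload_split_parts` is a hypothetical helper; `s3_client` is assumed to be a boto3 S3 client, and `upload_id` is the UploadId returned by create-multipart-upload.

```python
from pathlib import Path


def upload_split_parts(s3_client, bucket: str, key: str, upload_id: str,
                       folder: str, prefix: str = "video_part_") -> list:
    """Upload every file named prefix* in `folder` as one part each.

    Files are sorted by name, so video_part_aa becomes part 1,
    video_part_ab part 2, and so on. Returns the
    {"ETag": ..., "PartNumber": ...} entries needed to complete the upload.
    """
    parts = []
    paths = sorted(Path(folder).glob(prefix + "*"))
    for part_number, path in enumerate(paths, start=1):
        with path.open("rb") as body:
            response = s3_client.upload_part(
                Bucket=bucket, Key=key, PartNumber=part_number,
                UploadId=upload_id, Body=body,
            )
        parts.append({"ETag": response["ETag"], "PartNumber": part_number})
    return parts
```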

Create a file named complete.json in the current directory (the folder that contains video.mp4) listing each part number with the ETag returned above:

{

"Parts": [

{ "ETag": "f6279bc6c5aa4efa009c0d599cd1b206", "PartNumber": 1 },

{ "ETag": "5224ca5d459b8044bb554cfffdc4c122", "PartNumber": 2 },

{ "ETag": "e9942afaa877e2a530da4b06d8eae9fb", "PartNumber": 3 },

{ "ETag": "fcdd80466dd85133c87772e41123f3fa", "PartNumber": 4 },

{ "ETag": "9318088f5b6055f1f5af8f69bf982b49", "PartNumber": 5 },

{ "ETag": "d602321594b8219b5d0a857a4e5d34cd", "PartNumber": 6 }

]

}
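Instead of copying ETags by hand, complete.json can be generated. This sketch (hypothetical function `write_complete_json`) takes the ETags in part order, strips the surrounding double quotes printed by upload-part, as was done manually above, and writes the same structure.

```python
import json


def write_complete_json(etags: list, path: str = "complete.json") -> dict:
    """Write the --multipart-upload file for complete-multipart-upload.

    `etags` must be in part order (index 0 is part 1).
    """
    document = {
        "Parts": [
            {"ETag": etag.strip('"'), "PartNumber": number}
            for number, etag in enumerate(etags, start=1)
        ]
    }
    with open(path, "w", encoding="utf-8") as f:
        json.dump(document, f, indent=2)
    return document
```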

Final step: complete the upload, passing the UploadId and the complete.json file (which lists the ETag of each part):

-----------------------------------------------------------

aws s3api complete-multipart-upload --bucket ccitmultipartupload2 --key video.mp4 --upload-id XOdv_ADNJnTuS38S6iXGxorl0TFVpDFCGoEJ2FoO9wx0cpkIhrzMZks4phjX.y_1HUCWDBZKJ9LNO6sVEUOBSud6Y047fk3CcKI3mAhbo2r_QbK_T1DYkTUtj7jbs5Jl --multipart-upload "file://complete.json"

PS C:\Users\Administrator\Desktop\mulitpart> aws s3api complete-multipart-upload `

>> --bucket ccitmultipartupload2 `

>> --key video.mp4 `

>> --upload-id XOdv_ADNJnTuS38S6iXGxorl0TFVpDFCGoEJ2FoO9wx0cpkIhrzMZks4phjX.y_1HUCWDBZKJ9LNO6sVEUOBSud6Y047fk3CcKI3mAhbo2r_QbK_T1DYkTUtj7jbs5Jl `

>> --multipart-upload "file://complete.json"

{

"ServerSideEncryption": "AES256",

"Location": "https://ccitmultipartupload2.s3.eu-west-1.amazonaws.com/video.mp4",

"Bucket": "ccitmultipartupload2",

"Key": "video.mp4",

"ETag": "\"f5827b4132193b51faf9d0aa9d1768e1-6\"",

"ChecksumCRC64NVME": "JNuhFkcmQ8c=",

"ChecksumType": "FULL_OBJECT"

}

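The full file is uploaded successfully and now appears in the bucket as video.mp4. The "-6" suffix on the ETag shows that the object was assembled from 6 parts.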
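The "-6" ETag is not an MD5 of the whole file. A widely observed (but not formally documented) rule for unencrypted or SSE-S3 objects is: MD5 each part, concatenate the binary digests, take the MD5 of that, and append "-<number of parts>". Treat this sketch as an assumption-based integrity check: it can only match when the same part size is used, and AWS states that SSE-KMS objects do not have MD5-based ETags. The function names are hypothetical.

```python
import hashlib


def multipart_etag(parts: list) -> str:
    """MD5 of the concatenated binary MD5 digests, plus '-<part count>'."""
    digests = b"".join(hashlib.md5(part).digest() for part in parts)
    return f"{hashlib.md5(digests).hexdigest()}-{len(parts)}"


def multipart_etag_from_file(path: str, part_size: int) -> str:
    """Same calculation, reading the file in part_size chunks."""
    digests = []
    with open(path, "rb") as f:
        while chunk := f.read(part_size):
            digests.append(hashlib.md5(chunk).digest())
    return f"{hashlib.md5(b''.join(digests)).hexdigest()}-{len(digests)}"
```

If the rule holds, `multipart_etag_from_file("video.mp4", 50 * 1024 * 1024)` would be expected to reproduce the ETag printed by complete-multipart-upload.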

The same split-and-upload can also be done with a Python program (boto3), using the code below.

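Step1: Delete the existing split files from the folder; the script reads the original file in 50 MB chunks by itself. Save the code as app.py. Note that the file path is hard-coded here; in a real application it would be supplied dynamically.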

import boto3
from botocore.exceptions import BotoCoreError, ClientError

# Credentials are NOT hard-coded: boto3 picks them up from `aws configure`
# (~/.aws/credentials), environment variables, or an IAM role.
BUCKET_NAME = 'ccitmultipartupload2'
REGION = 'eu-west-1'
CHUNK_SIZE = 50 * 1024 * 1024  # 50 MB parts (minimum part size is 5 MB)


def multipart_upload(file_path, bucket_name, key_name):
    """
    Perform a multipart upload to S3.

    Args:
        file_path (str): Local path to the file to upload.
        bucket_name (str): Target S3 bucket.
        key_name (str): Target key in the S3 bucket.
    """
    s3_client = boto3.client('s3', region_name=REGION)

    # Start the multipart upload and remember its UploadId
    response = s3_client.create_multipart_upload(Bucket=bucket_name, Key=key_name)
    upload_id = response['UploadId']
    print(f"Multipart upload initiated with UploadId: {upload_id}")

    try:
        parts = []
        part_number = 1

        # Read the file in chunks and upload each chunk as one part
        with open(file_path, 'rb') as file:
            while True:
                data = file.read(CHUNK_SIZE)
                if not data:
                    break
                print(f"Uploading part {part_number}...")
                part_response = s3_client.upload_part(
                    Bucket=bucket_name,
                    Key=key_name,
                    PartNumber=part_number,
                    UploadId=upload_id,
                    Body=data,
                )
                # S3 needs every part's number and ETag to assemble the object
                parts.append({'PartNumber': part_number, 'ETag': part_response['ETag']})
                part_number += 1

        # Ask S3 to join the parts into a single object
        print("Completing multipart upload...")
        s3_client.complete_multipart_upload(
            Bucket=bucket_name,
            Key=key_name,
            UploadId=upload_id,
            MultipartUpload={'Parts': parts},
        )
        print("Multipart upload completed successfully!")

    except (BotoCoreError, ClientError, OSError) as e:
        # Abort so the uploaded parts are deleted and stop incurring charges
        print(f"Error occurred: {e}")
        print("Aborting multipart upload...")
        s3_client.abort_multipart_upload(Bucket=bucket_name, Key=key_name, UploadId=upload_id)
        print("Multipart upload aborted.")


if __name__ == '__main__':
    # Example usage (hard-coded path; adjust for your machine)
    file_path = 'C:/Users/Administrator/Desktop/mulitpart/video.mp4'
    key_name = 'video.mp4'
    multipart_upload(file_path, BUCKET_NAME, key_name)

PS C:\Users\Administrator\Desktop\mulitpart> python app.py

Multipart upload initiated with UploadId: 2hLmWOuhdZFPHj0z7bSm0fer3amVbXPnstLAeKx01UT.eG6tHuq7_2Ll32EalvWz8W3WKpo8_vSCuoSvZOQR.Hk9AlLDY_hsbKEpFsoeWDPyC0jMkqVCf5RFxTt4T5b.

Uploading part 1...

Uploading part 2...

Uploading part 3...

Uploading part 4...

Uploading part 5...

Uploading part 6...

Completing multipart upload...

Multipart upload completed successfully!
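Since parts are independent (they can be uploaded in any order and retried individually), the sequential loop in app.py can be parallelised. The sketch below is an assumption-laden variant, not part of the original program: the hypothetical helpers read each part by offset, upload up to `workers` parts at a time, and retry only the part that failed. boto3 clients can be shared across threads, and botocore already retries some errors on its own; the explicit loop just makes part-level retry visible. Memory use is roughly `workers * chunk_size`.

```python
import os
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 50 * 1024 * 1024  # same 50 MB parts as app.py


def upload_part_with_retry(s3_client, bucket, key, upload_id, part_number, data, attempts=3):
    """Upload a single part; on failure, retry just this part up to `attempts` times."""
    for attempt in range(1, attempts + 1):
        try:
            response = s3_client.upload_part(
                Bucket=bucket, Key=key, PartNumber=part_number,
                UploadId=upload_id, Body=data,
            )
            return {"PartNumber": part_number, "ETag": response["ETag"]}
        except Exception:
            if attempt == attempts:
                raise  # give up; the caller should abort the upload


def read_part(file_path, part_number, chunk_size):
    """Read one part's bytes directly by offset, so parts are independent."""
    with open(file_path, "rb") as f:
        f.seek((part_number - 1) * chunk_size)
        return f.read(chunk_size)


def upload_parts_parallel(s3_client, bucket, key, upload_id, file_path,
                          chunk_size=CHUNK_SIZE, workers=4):
    """Upload all parts of file_path concurrently; return parts in ascending order."""
    size = os.path.getsize(file_path)
    part_count = (size + chunk_size - 1) // chunk_size

    def job(part_number):
        data = read_part(file_path, part_number, chunk_size)
        return upload_part_with_retry(s3_client, bucket, key, upload_id, part_number, data)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = list(pool.map(job, range(1, part_count + 1)))
    # complete_multipart_upload expects parts in ascending PartNumber order
    return sorted(parts, key=lambda p: p["PartNumber"])
```

The returned list can be passed as `MultipartUpload={'Parts': parts}`. For everyday use, boto3's managed `upload_file` with a `TransferConfig` (for example `multipart_chunksize` and `max_concurrency`) performs concurrent multipart uploads without hand-written code.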

Step2:

Cross-Origin Resource Sharing (CORS)

Step1: Create a bucket named ccitpublicbucket1. While creating it, uncheck "Block all public access".

Step2: Use the AWS Policy Generator to create a bucket policy that grants access to ccitpublicbucket1.

Click Add statement > Generate policy.

Copy the JSON:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "Statement1",

"Effect": "Allow",

"Principal": "*",

"Action": "s3:GetObject",

"Resource": "arn:aws:s3:::ccitpublicbucket1/*"

}

]

}

Step3: Click Save. The bucket is now public: anyone can read the files in it.
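The same public-read policy can be generated and attached from code. A minimal sketch: `public_read_policy` and `apply_policy` are hypothetical helper names, and `s3_client` is assumed to be a boto3 S3 client. The policy grants only `s3:GetObject`, which is all that reading files requires; a statement with `"Action": "s3:*"` and `"Principal": "*"` would also let anyone upload or delete objects. The call is expected to be rejected while Block Public Access still blocks public policies on the bucket.

```python
import json


def public_read_policy(bucket: str) -> dict:
    """Bucket policy that lets anyone read (GET) objects in `bucket`."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "PublicReadGetObject",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{bucket}/*",  # objects, not the bucket itself
        }],
    }


def apply_policy(s3_client, bucket: str, policy: dict) -> None:
    """Attach `policy` to `bucket` (same effect as pasting it in the console)."""
    s3_client.put_bucket_policy(Bucket=bucket, Policy=json.dumps(policy))


# apply_policy(boto3.client("s3"), "ccitpublicbucket1", public_read_policy("ccitpublicbucket1"))
```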

Step4: Create a file named Source.html with the code below (S3 object keys are case-sensitive, so the name must match the URL used later):

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Source Page</title>

</head>

<body>

<h1>Source Page</h1>

<div id="data">

<p>This is some data in the source page.</p>

<p>More data here.</p>

</div>

</body>

</html>

Step4 (continued): Create a second file, Dest.html, with the code below:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Read Data from Another Page</title>

</head>

<body>

<h1>Data from Another HTML Page</h1>

<button id="loadData">Load Data</button>

<div id="result"></div>

<script>

document.getElementById('loadData').addEventListener('click', () => {

fetch('https://ccitpublicbucket1.s3.eu-west-1.amazonaws.com/Source.html')

.then(response => response.text())

.then(htmlString => {

// Parse the HTML string into a DOM

const parser = new DOMParser();

const doc = parser.parseFromString(htmlString, 'text/html');

// Extract data from the parsed HTML

const data = doc.querySelector('#data').innerHTML;

// Display the extracted data

document.getElementById('result').innerHTML = data;

})

.catch(error => console.error('Error fetching data:', error));

});

</script>

</body>

</html>

Step5: Upload Source.html to ccitpublicbucket1 and copy its object URL: https://ccitpublicbucket1.s3.eu-west-1.amazonaws.com/Source.html

Step6: In Dest.html, make sure the fetch() call uses that URL: fetch('https://ccitpublicbucket1.s3.eu-west-1.amazonaws.com/Source.html')

Save the file, then upload Dest.html to the same public bucket.

Step7: Open the Source.html object URL; the browser shows the source page text.

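Step8: Open the Dest.html object URL and click Load Data; the text from Source.html is displayed. This works because both pages are served from the same origin, https://ccitpublicbucket1.s3.eu-west-1.amazonaws.com.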

Step9: Create one more bucket, ccitprivatebucket1, with the default settings except that "Block all public access" is unchecked.

Step10: Add the following bucket policy to ccitprivatebucket1 and click Save:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "Statement1",

"Effect": "Allow",

"Principal": "*",

"Action": "s3:GetObject",

"Resource": "arn:aws:s3:::ccitprivatebucket1/*"

}

]

}

Step10 (continued): Upload only Dest.html to ccitprivatebucket1. Open its object URL and click Load Data. The data does not load, and the browser console shows this error:

Access to fetch at 'https://ccitpublicbucket1.s3.eu-west-1.amazonaws.com/Source.html' from origin 'https://ccitprivatebucket1.s3.eu-west-1.amazonaws.com' has been blocked by CORS policy: No 'Access-Control-Allow-Origin' header is present on the requested resource.

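The page was loaded from the origin https://ccitprivatebucket1.s3.eu-west-1.amazonaws.com, but it fetches Source.html from https://ccitpublicbucket1.s3.eu-west-1.amazonaws.com. The host names differ, so these are different origins, and because ccitpublicbucket1 returns no Access-Control-Allow-Origin header, the browser blocks the response.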
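Browsers compare an origin as the triple (scheme, host, port). This small sketch (hypothetical helper names, standard library only) shows why the two bucket URLs count as different origins while Source.html and Dest.html in the same bucket do not.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> tuple:
    """Return the (scheme, host, port) triple that browsers compare."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS[parts.scheme]  # fill in the implied port
    return parts.scheme, parts.hostname, port


def same_origin(url_a: str, url_b: str) -> bool:
    return origin(url_a) == origin(url_b)


source = "https://ccitpublicbucket1.s3.eu-west-1.amazonaws.com/Source.html"
print(same_origin(source, "https://ccitpublicbucket1.s3.eu-west-1.amazonaws.com/Dest.html"))
print(same_origin(source, "https://ccitprivatebucket1.s3.eu-west-1.amazonaws.com/Dest.html"))
```

The first call prints True and the second prints False: only the host name differs, and that is enough to make the request cross-origin.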

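Step11: To allow the cross-origin request, add a CORS configuration to ccitpublicbucket1 (the bucket that serves Source.html) that allows the ccitprivatebucket1 origin: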

[

{

"AllowedHeaders": [

"*"

],

"AllowedMethods": [

"GET",

"POST",

"PUT"

],

"AllowedOrigins": [

"https://ccitprivatebucket1.s3.eu-west-1.amazonaws.com"

],

"ExposeHeaders": [

"x-amz-server-side-encryption",

"x-amz-request-id",

"x-amz-id-2"

],

"MaxAgeSeconds": 3000

}

]

In ccitpublicbucket1 > Permissions > Cross-origin resource sharing (CORS), click Edit, paste the configuration above, and click Save changes.
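The same CORS rule can be applied with boto3's `put_bucket_cors`. In this sketch `apply_cors` is a hypothetical helper and `s3_client` is assumed to be a boto3 S3 client. It narrows AllowedMethods to GET, which is all the fetch() in Dest.html needs, whereas the console configuration above also allows POST and PUT.

```python
def apply_cors(s3_client, bucket: str, allowed_origin: str) -> dict:
    """Allow GET requests from `allowed_origin` to objects in `bucket`."""
    cors_configuration = {
        "CORSRules": [{
            "AllowedHeaders": ["*"],
            "AllowedMethods": ["GET"],
            "AllowedOrigins": [allowed_origin],
            "ExposeHeaders": [
                "x-amz-server-side-encryption",
                "x-amz-request-id",
                "x-amz-id-2",
            ],
            "MaxAgeSeconds": 3000,  # how long browsers may cache a preflight response
        }]
    }
    s3_client.put_bucket_cors(Bucket=bucket, CORSConfiguration=cors_configuration)
    return cors_configuration


# apply_cors(boto3.client("s3"), "ccitpublicbucket1",
#            "https://ccitprivatebucket1.s3.eu-west-1.amazonaws.com")
```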

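Click Load Data again: the data from one origin is now displayed on a page served from another origin. A remaining console error about favicon.ico is only a warning; it means the site provides no favicon (the small icon shown in the browser tab) and can be ignored.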

CloudFront (Global service)

Content Delivery Network (CDN): CloudFront caches content at edge locations close to users. First, we host a static website in S3.

While creating the bucket, uncheck Block public access; this allows public access from outside.

Add the bucket policy below and upload the index page.

Step1: For the static website, open ccitpublicbucket1 > Permissions > Bucket policy and add:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "Statement1",

"Effect": "Allow",

"Principal": "*",

"Action": "s3:GetObject",

"Resource": "arn:aws:s3:::ccitpublicbucket1/*"

}

]

}

Then open Properties > Static website hosting, enable it, set the index document, and save.

Upload the HTML static page to the bucket.

Step2: Click the website endpoint. If you are in the same region as the bucket, the page opens quickly; from another region (for example Canada or the USA), it takes longer because of the geographic distance.

In this situation, edge caches help speed up the website.

Step3: For this, AWS provides a CDN called CloudFront. When you create a CloudFront distribution, your website is served through edge locations around the world.

For example, if our static page is hosted in India and someone in Canada accesses it, the nearby edge location keeps a cached copy after the first request, so later requests are served from that edge location instead of the India region. This reduces latency and speeds up the response.

Create a distribution and select ccitpublicbucket1 as the origin.

Step4: For Enable Origin Shield, select No.

Origin shield is an additional caching layer that can help reduce the load on your origin and help protect its availability.

Step5: Click Create distribution; the CDN distribution is created successfully.

--Done

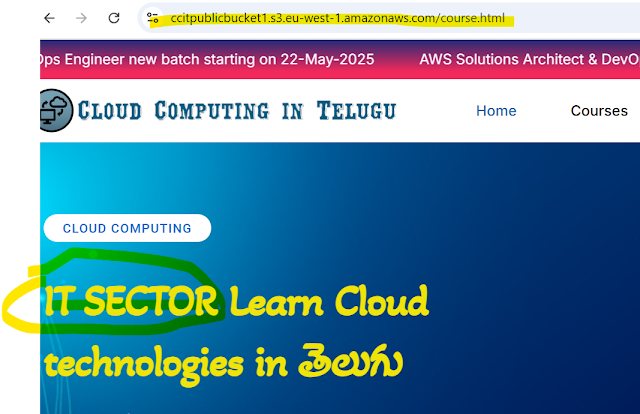

Example: to see how caching works, edit the text "IT Sector" in course.html, save the file, and upload it to the public bucket ccitpublicbucket1.

Step1: In Course.html, add the text "IT SECTOR subbu".

Step2: Notice the difference: through the bucket's website endpoint, the change is reflected immediately.

Step3: Through the CloudFront distribution URL, the change is not reflected immediately, because the edge locations keep serving their cached copy.

Step4: To control this, edit the distribution's behavior and look at Cache key and origin requests. The default cache policy is CachingOptimized; click View policy.

As shown there, its default TTL is 86400 seconds (24 hours): an edge location can keep serving its cached copy for up to a day before fetching the file from the bucket again. To refresh sooner, create your own cache policy.

Click Create policy, give the policy a name (here ccitpolicy), and set the TTL values to 30 seconds so that edge locations refresh every 30 seconds. Then, under Cache policy, choose ccitpolicy and click Save changes.
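The effect of the TTL can be illustrated with a toy model. This is a deliberately simplified sketch (hypothetical `ToyEdgeCache` class), not how CloudFront is implemented: real edge locations also honour Cache-Control headers and the policy's minimum and maximum TTLs. It shows why a 30-second TTL picks up the edited Course.html quickly while the 86400-second default keeps serving the old copy.

```python
class ToyEdgeCache:
    """Simplified edge location: serve the cached copy until it is older
    than ttl_seconds, then fetch the file from the origin again."""

    def __init__(self, origin: dict, ttl_seconds: int):
        self.origin = origin     # path -> content; stands in for the S3 bucket
        self.ttl = ttl_seconds
        self.cache = {}          # path -> (content, time it was fetched)

    def get(self, path: str, now: float) -> str:
        entry = self.cache.get(path)
        if entry is None or now - entry[1] >= self.ttl:
            # Cache miss or expired copy: go back to the origin
            entry = (self.origin[path], now)
            self.cache[path] = entry
        return entry[0]


bucket = {"Course.html": "IT Sector"}
short_ttl = ToyEdgeCache(bucket, ttl_seconds=30)
daily_ttl = ToyEdgeCache(bucket, ttl_seconds=86400)
short_ttl.get("Course.html", now=0)
daily_ttl.get("Course.html", now=0)
bucket["Course.html"] = "IT SECTOR subbu"   # edit uploaded to the bucket
print(short_ttl.get("Course.html", now=30))
print(daily_ttl.get("Course.html", now=30))
```

After 30 seconds the short-TTL cache returns the edited text while the 24-hour cache still returns "IT Sector". When an update must appear immediately without lowering the TTL, a CloudFront invalidation (for example `aws cloudfront create-invalidation --distribution-id ... --paths "/Course.html"`) removes the cached copy from the edge locations.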

Private Bucket (no public bucket policy; access is granted only to the CloudFront distribution)

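Step6: Create one more CloudFront distribution, this time with ccitprivatebucket1 as the origin, and choose origin access control for origin access. The console then shows this message:

You must update the S3 bucket policy.

CloudFront will provide you with the policy statement after creating the distribution.

This bucket policy is what lets the distribution read files from the private bucket, so they can be accessed through the CloudFront endpoint.

Enable WAF and click Create distribution.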

Step7: After the distribution is created, CloudFront provides the following policy statement:

{

"Version": "2008-10-17",

"Id": "PolicyForCloudFrontPrivateContent",

"Statement": [

{

"Sid": "AllowCloudFrontServicePrincipal",

"Effect": "Allow",

"Principal": {

"Service": "cloudfront.amazonaws.com"

},

"Action": "s3:GetObject",

"Resource": "arn:aws:s3:::ccitprivatebucket1/*",

"Condition": {

"StringEquals": {

"AWS:SourceArn": "arn:aws:cloudfront::216989104632:distribution/E24UYTF97GKQCF"

}

}

}

]

}

Step8: Apply the above policy to ccitprivatebucket1: open ccitprivatebucket1 > Permissions > Bucket policy, paste the JSON, and click Save changes.
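The policy above can also be generated from the bucket name and the distribution ARN and attached with `put_bucket_policy`. `cloudfront_oac_policy` and `attach_oac_policy` are hypothetical helpers and `s3_client` is assumed to be a boto3 S3 client; the structure mirrors the statement CloudFront generated above.

```python
import json


def cloudfront_oac_policy(bucket: str, distribution_arn: str) -> dict:
    """Bucket policy letting only one CloudFront distribution read objects."""
    return {
        "Version": "2008-10-17",
        "Id": "PolicyForCloudFrontPrivateContent",
        "Statement": [{
            "Sid": "AllowCloudFrontServicePrincipal",
            "Effect": "Allow",
            "Principal": {"Service": "cloudfront.amazonaws.com"},
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{bucket}/*",
            # Only requests made on behalf of this distribution match
            "Condition": {"StringEquals": {"AWS:SourceArn": distribution_arn}},
        }],
    }


def attach_oac_policy(s3_client, bucket: str, distribution_arn: str) -> None:
    policy = cloudfront_oac_policy(bucket, distribution_arn)
    s3_client.put_bucket_policy(Bucket=bucket, Policy=json.dumps(policy))
```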

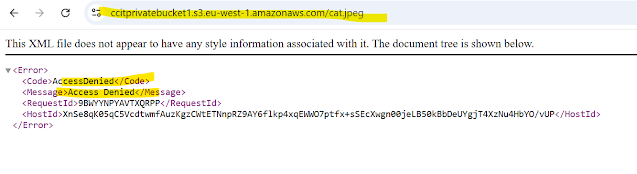

Step9: The bucket's images are now accessible through the distribution URL.

Step10: Opening the same objects through the bucket endpoint returns Access Denied. The bucket policy grants s3:GetObject only to this CloudFront distribution (it is an access policy, not a cross-origin/CORS rule), so the objects can be read only through the distribution URL.

--Thanks